
Marco Mezquida ‘Piano + AI’

The first show of the AI and Music Festival brings together an eminence of jazz and classical piano with a custom AI, in a co-creation between Sónar and Barcelona’s UPC.

The show, entitled “Piano + AI”, will be the world premiere of a work co-created by the famous pianist and composer Marco Mezquida together with UPC researchers Iván Paz and Philippe Salembier, in collaboration with the Escola Superior de Música de Catalunya (ESMUC). The piece will promote a live ‘conversation’ between the piano and artificial intelligence, using real-time audio analysis and digital sound synthesis.

A virtuoso pianist, at home with both classical music and jazz, and an excellent improviser, throughout his career Marco Mezquida has played with instrumentalists of all genres, and this time, his collaborator on stage will be an AI.

Marco Mezquida "Piano + AI" is a co-creation project with scientists and engineers from the Polytechnic University of Catalonia (UPC), who have developed an artificial intelligence instrument that recognizes the pianist’s different techniques and responds in real time with different synthesized soundscapes, creating a feedback loop between the pianist and the artificial intelligence.

Iván Paz, musician and researcher specialized in the use of artificial intelligence within the practice of livecoding, and Philippe Salembier, engineer specialized in digital signal and image processing, have worked on the project.

Josep Maria Comajuncosas, musician, computer engineer and professor at ESMUC (Escola Superior de Música de Catalunya), and Joan Canyelles (Shelly), musician and engineer in audiovisual systems, collaborated in the sound design.

Rehearsals for the concert took place at ESMUC, with the collaboration of the pianist Anna Francesch.

co-created by Sónar and UPC

Demo Research 1: wAIggledance

wAIggledance

Creative AI is focused on the use of machine learning and deep learning, and it’s refreshing to discover projects that borrow tools and concepts from complex systems and swarm intelligence, a collective behaviour of self-organising systems, whether natural (a bee swarm, hence the name) or artificial.

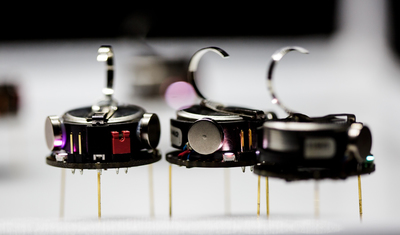

wAIggledance researches the aesthetic and philosophical implications of collectivity and agent self-organization from a swarm of “kilobots”, small robots that can execute simple algorithms, that move by engine vibration, and that can exchange messages with their neighbors in a radius of approximately 10 centimeters.

As they move, they generate sounds, and rhythmic patterns emerge depending on how synchronized their movement is.

LP Duo presents: Beyond Quantum Music

Since 2004 the Serbian classical piano duo of Sonja Loncar & Andrija Pavlovic have experimented with the endless possibilities of performing on two pianos, with their explosive interpretations of classical works earning them a reputation somewhere between that of a chamber orchestra and a rock band.

At the AI and Music S+T+ARTS festival, the duo will present a remote video performance that captures their ongoing interest in quantum physics and generative audio. Titled ‘Beyond Quantum Music’, the piece references their eponymous art & science research project with partners University of Oxford, Ars Electronica Linz, TodaysArt Festival, Institute of Musicology SASA Belgrade, and the Universities of Aarhus and Hannover, blurring the lines between composition, interpretation and performance.

in collaboration with Quantum Music

Demo Research 2: Polifonia

Polifonia. Playing the soundtrack of our history.

From the soundscape of Italian historical bells, to the influence of French operas on traditional Dutch music, European cultural heritage hides a goldmine of unknown encounters, influences and practices that can transport us to experience the past, understand the music we love, and imagine the soundtrack of our future.

This is the goal of Polifonia, an initiative of the Università di Bologna that wants to provoke a paradigm shift in Musical Heritage preservation policies, management practice, research methodologies, interaction means and promotion strategies.

To achieve this, Polifonia will develop computing approaches that facilitate access to and discovery of European Musical Heritage and enable creative reuse of musical heritage at scale, connecting both the tangible heritage (instruments, theaters, etc.) and the intangible, and connecting previously unconnected data.

This research project will be presented by the Professor of Università di Bologna, and lead of the project, Valentina Presutti.

Human Brother

The brainchild of internationally renowned composer Ferran Cruixent and Artur Garcia, a key researcher at the Barcelona Supercomputing Center, Human Brother is a work for soprano and orchestra that explores the relationship between humans and artificial intelligence.

A collaboration between one of the foremost voices in contemporary classical music and a member of the team building BSC’s first quantum computer, this collision between seemingly separate worlds is itself a metaphor for the creative possibilities of random probabilities - creating art from science.

in collaboration with Barcelona Supercomputing Center

YACHT

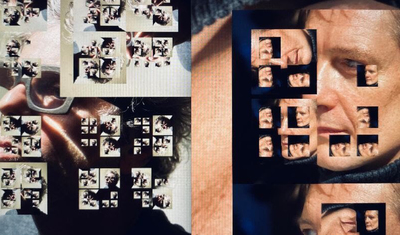

To compose their GRAMMY-nominated album Chain Tripping, post-pop trio YACHT created a “bespoke and janky” technique of algorithmic collage, drawing from a variety of machine learning models to generate the melodic and lyrical patterns they used as source material for a cut-and-paste compositional process.

In doing so, they held fast to self-imposed rules: everything had to be generated from their own back catalog, with no space for improvisation or “jamming.” These constraints kept the band searching for creative workarounds and subversions within the limitations of the technology itself. For the AI and Music Festival, YACHT will debut an entirely new audio-visual composition that represents a devolution of these techniques, employing new tools and a freer range of expressive choices. With this new “megamix,” YACHT will break their own rules—taking the best of what machine learning offers as a creative tool while allowing space for the unexpected, the inexplicable, and the human.

Bot Bop - Integers & Strings

BotBop was formed in 2019 at the special request of Belgian arts center BOZAR in Brussels, specifically to research new artistic directions in music, using state-of-the-art techniques in the field of artificial intelligence.

Electronic wind instrument and modular patching aficionado Andrew Claes and live coding geek Dago Sondervan were scouted to prepare a special performance in co-production with Ars Electronica. Research engineer Kasper Jordaens joined the team, providing further A.I. and machine learning technical assistance, and BotBop was born.

In this second iteration of the BotBop saga, Dago Sondervan, Andrew Claes and Kasper Jordaens team up again for a new challenge, this time incorporating a classical string quartet as a vehicle for exploring new possibilities in a staggering real-time generated, computer-assisted live audio-visual performance.

The second iteration of Bot Bop’s exploratory journey into the artistic potential of A.I. will deepen the focus incorporating real-time string arrangement and its visual representation.

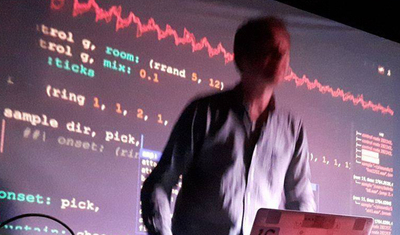

Again, live coding expert Dago Sondervan, who uses multiple live coding environments at the same time, and accomplished wind-controller + patching adept Andrew Claes will team up with Kasper Jordaens, arts R&D engineer, this time creating an interactive environment where improvisation and computer-aided composition can overflow.

Continuing development of BotBop’s custom software and implementations, using primarily open source software, enables real-time score generation and its extrapolation to individual parts for a string quartet.

A fully real-time generated score will be developed guiding the string quartet, to be further visually manipulated by Jordaens and projected onto a backdrop screen, highlighting what’s generated as well as adding a layer of immersion through graphic art.

The performance will consist of five separate movements, each outlining a specific idea. Different strategies, including Euclidean divisions, Fibonacci sequences and other mathematical structures, will be used to generate rhythms, whereas A.I.-moderated melodic content, based on machine learning algorithms, will be utilised to control adjustments and mutations of the generated ‘compositions’.
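As a rough illustration of the rhythm strategies mentioned above, here is a minimal Python sketch of a Euclidean division (spreading a number of onsets as evenly as possible over a cycle of steps) and of a Fibonacci-based list of note durations. It is not BotBop’s software: the function names `euclidean_rhythm`, `fibonacci_durations` and `render` are hypothetical, and the simple modulo rule used here is one common way to obtain such patterns, assumed purely for illustration.

```python
from typing import List


def euclidean_rhythm(pulses: int, steps: int) -> List[int]:
    """Spread `pulses` onsets as evenly as possible over `steps` slots."""
    if steps <= 0 or not 0 <= pulses <= steps:
        raise ValueError("need steps > 0 and 0 <= pulses <= steps")
    # Slot i carries an onset when the remainder of i * pulses modulo
    # steps is below pulses, i.e. the running total has just passed a
    # multiple of steps (illustrative rule, not BotBop's own method).
    return [1 if (i * pulses) % steps < pulses else 0 for i in range(steps)]


def fibonacci_durations(count: int) -> List[int]:
    """Return the first `count` Fibonacci numbers (1, 1, 2, 3, ...) as durations."""
    durations: List[int] = []
    a, b = 1, 1
    for _ in range(count):
        durations.append(a)
        a, b = b, a + b
    return durations


def render(pattern: List[int]) -> str:
    """Draw a pattern as text: 'x' for an onset, '.' for a rest."""
    return "".join("x" if hit else "." for hit in pattern)


if __name__ == "__main__":
    print(render(euclidean_rhythm(3, 8)))  # x..x..x.
    print(render(euclidean_rhythm(5, 8)))  # x.x.xx.x
    print(fibonacci_durations(5))          # [1, 1, 2, 3, 5]
```

Three onsets over eight steps gives the familiar "tresillo" figure. Changing the two numbers per movement is one simple way a live coder could vary rhythmic density while keeping the onsets evenly spaced.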

A plethora of harmonic systems will be evaluated, mainly inspired by masters such as Bartók and Shostakovich, breeding a futuristic hybrid electronic and acoustic esthetic.

Performers on stage will be: Andrew Claes, Dagobert Sondervan, Kasper Jordaens, Pieter Jansen, Herlinde Verjans, Jasmien Van Hauthem, Romek Maniewski-Kelner.

a co-production of Sónar and Bozar

DIANA AI Song Contest

A collaboration between Sónar and c/o pop Festival in Cologne, DIANA Song Contest is the brainchild of Jovanka v. Wilsdorf, a Berlin-based musician, artist producer, and songwriter at BMG Rights Management. The audiovisual piece follows the competition and performances of the second annual DIANA Song Contest, a collaborative AI and songwriting camp where the music is created live and on location. The project’s mission is to actively promote the creative dialogue between musicians and AI software, and to kick off inspired collaborations as well as strong songs going beyond genre bubbles.

presented by c/o pop festival

Hexorcismos

Electromagnetic fields and electricity are the life-force of the digital realm and Artificial Intelligence. In this exclusive performance for the AI and Music Festival, Hexorcismos, aka multidisciplinary Berlin-based artist Moises Horta, takes a multisensory approach to an animistic ritual featuring A.I.-synthesized visuals and sounds, alongside the chilling effects of electromagnetic microphones, exploring the non-human sonic qualities of digital devices.

Recorded live in the XR room at Factory Berlin, these energies are channeled through a techno-shamanistic ritual into an intense soundscape aided by a custom-built, neural-network-powered Djembe A.I.

in collaboration with Factory Berlin

Chameleon’s Blendings performed by an OBC String Quartet and pianist Fani Karagianni

For this exclusive audiovisual piece, presented by the AI investigation and communication platform Artificia, we go behind the scenes of Thessaloniki University’s AI Chameleon, a GAN capable of generating musical harmonies that can be interpreted by various musicians. In the 15-minute capsule, a chamber quartet of musicians from Barcelona’s OBC and the Greek pianist Fani Karagianni will play versions of these harmonies in an interaction between human creation and algorithmic composition.

in collaboration with Artificia

Interpreting Quantum Randomness: Concept by Reiko Yamada and Maciej Lewenstein

If music is mathematics, then it stands to reason that by applying advanced mathematical theory to the structure of music itself, hitherto undiscovered sounds will emerge. This is the broad conceptual framework underlying the work of Japanese composer and sound artist Reiko Yamada, whose ongoing work ‘Of Randomness and Imperfection’ seeks to produce unique sound events from the genuine randomness of quantum physical systems.

Developed with Prof. Dr. Maciej Lewenstein (ICFO, Barcelona), the resulting show ‘Interpreting Quantum Randomness’ presents the results of this research - a series of radically new timbres and frequencies, created by the application of the principles of quantum theory to music, an aesthetic approximation of the level of quantum randomness that exists in the natural world.

in collaboration with ICFO

Hongshuo Fan ‘Metamorphosis’

Selected following an open call for projects at the intersection of artificial intelligence and musical performance, Hongshuo Fan’s Metamorphosis is a real-time interactive audio-visual composition for one human performer and two artificial intelligence performers, based around human and machine improvisations on the ancient Chinese percussion instrument Bianqing (磬). During the course of the performance, the instrument’s sound and shape will constantly evolve, based on the interaction and interpretation of the human and machine players. From ancient to modern, concrete to abstract, the fusion of sound, image and live performance creates an immersive experience exploring the dramatic shift and co-evolution between humans and AI.

Franz Rosati ‘Latentscape’

Musician, digital artist and lecturer Franz Rosati presents his latest project ‘Latentscape’ at the AI and Music Festival.

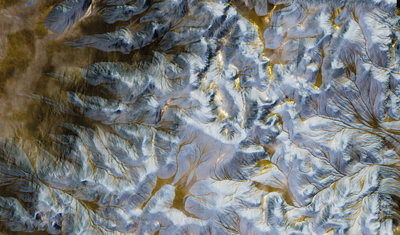

The work, explicitly designed as a performable AV concert series, depicts the exploration of virtual landscapes and territories, supported by music generated by machine learning tools trained on traditional, folk and pop music with no temporal and cultural limitations. Employing GANs trained on datasets of publicly available satellite imagery (and deliberately manipulated to encourage randomness), the work aims to de-contextualize and question the notion of geographical and cultural borders and boundaries, resulting in a work where streams of pixels take the form of cracks, erosions or mountains, and where colors and textures are the virtual representation of memories and emotions that the territory expresses and carries with it through its shape.

Demo Research 3: ‘Jazz as Social Machine’

Of all possible music, jazz is the most elusive genre when it comes to AI emulation: the energy of wind instruments is a challenge for neural networks, the virtuosity displayed by jazz performers is not easy for algorithms either, not to mention the social and improvisational nature of jazz: artificial intelligences cannot yet interpret the gestures, glances and other interactions with which jazz musicians agree to play.

This is the context for the research project "Jazz As Social Machine", an initiative of the Alan Turing Institute led by Dr. Thomas Irvine, which investigates the social interactions that take place within improvisation, as well as the historical memory of jazz, which each performer carries with them and which is not yet transferable to even the most complex neural network.

Nabihah Iqbal and Libby Heaney

‘The Whole Earth Chanting’ is a live performance and recorded work by artist and quantum physicist Libby Heaney and performer and ethnomusicologist Nabihah Iqbal. The work uses the power of voice, sound and music, and the intimacy of performance to explore new expressions of belonging and collective identity between humans and non-humans - a post-human spiritualism, entangling human perception with the material world.

A live collaboration between a generative AI, trained on examples of chants, both ‘human and non-human’ and the live synths and vocals of Nabihah Iqbal, the piece pushes against widespread uses of artificial intelligence to manage (supposed) risks in the service of the status quo. As religious chants blur with football fans’ singing, birds and Iqbal's voice, the boundaries of categories through which we usually understand the world are dissolved, creating a transcendental journey enabling the ‘other’ to enter and transform.

This work was originally commissioned and performed at Radar Loughborough as part of its Risk Related programme.

AWWZ b2b AI DJ

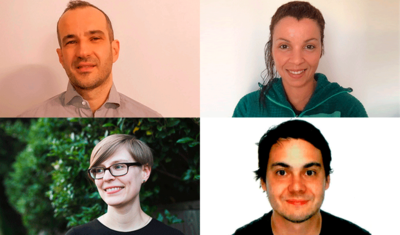

One of the three co-creations made specially for the Festival, AWWZ b2b AI DJ is a unique collaboration between DJ and producer Awwz and a team of researchers from the UPC: Mireia de Gracia, Casimiro Pio and Marta Ruíz Costa-Jussà.

Taking place at the intersection of AI genre classification, performance and audience participation, a specially trained AI will translate YouTube comments into song suggestions. The resulting track selections will then be chosen by the DJ in a collaborative process, inviting reflection on questions of musical taste and the ability of a DJ to ‘read’ an audience, as well as pointing the way forward to the future of DJing.

co-created by Sónar and UPC

Holly Herndon presents Holly+ featuring Maria Arnal, Tarta Relena and Matthew Dryhurst

Already a conceptual avatar for what’s possible with algorithmic and AI-assisted sound design, the artist, musician and researcher Holly Herndon has made this a (virtual) reality with the unveiling of her latest project, Holly+.

An AI-based tool for processing polyphonic sound, backed by a Decentralized Autonomous Organization (DAO) underpinned by the blockchain, Holly+ is a digital audio likeness of the artist that ‘sings’ uploaded audio in the artist’s own voice. For this special presentation at the AI and Music Festival, Holly will run a residency at Barcelona’s Hangar with two of the city’s most forward-thinking vocal artists - Maria Arnal and Tarta Relena - before presenting the results in a live show by Holly+ at the festival along with her creative partner Mat Dryhurst.

An unmissable demonstration of the creative potential of AI, and of the power of the human voice.

Rob Clouth A/V Live

Working at the vanguard of generative music, image and sound design, Rob Clouth is a Barcelona-based artist and software developer whose work rethinks how we interact with music and technology. At the AI and Music Festival, Clouth will present his new audiovisual project Zero Point, a work that explores themes of chance, chaos, coincidence and the ephemeral, using the zero-point energy from quantum mechanics as a unifying concept.

Utilizing a generative 3D system he has coded himself, Clouth has created a unique visual language of shimmering, morphing spaces with perceived scales that shift seamlessly from the subatomic up to the planetary - a reflection on the ubiquity of zero-point energy that extends into every corner of the universe.

Hamill Industries & Kiani del Valle ‘ENGENDERED OTHERNESS. A symbiotic AI dance ensemble’

A spectacular co-creation between visual artists Hamill Industries, award winning choreographer Kiani del Valle and a team of AI specialists from the UPC, ‘Engendered Otherness - A symbiotic AI dance ensemble’, brings generative audio, computer vision and dance together in an audiovisual show that pushes the limits of the human body into new territory thanks to the possibilities of AI.

The work represents another high point for Hamill Industries, the prestigious Barcelona-based visual design studio run by Pablo Barquín and Anna Diaz. Mixing programming, robotics and video techniques to explore concepts of nature, the cosmos and the laws of physics, their work has been featured and lauded by artists such as Floating Points and festivals such as Ars Electronica. For this work they unite for the first time with the Puerto Rican-born choreographer Kiani del Valle, founder of KDV Dance Ensemble, who has worked with Matthew Dear, Dirty Projectors, Kasper Bjørke, Unknown Mortal Orchestra and Clark.

A team of AI specialists from the UPC has worked on the scientific part. The team is formed by Stefano Rosso, energy and sustainability graduate, data scientist and collaborator of the artist Sofia Crespo; Martí de Castro, industrial engineer specialised in computer vision and PhD candidate in artificial intelligence; and Javier Ruiz Hidalgo, researcher and PhD in image processing.

co-created by Sónar and UPC

Mouse On Mars ‘AAI Live’

The pioneering German duo of Jan St. Werner and Andi Toma have been at the forefront of experimental electronic music for over 25 years. Their latest project draws on this rich legacy of experimentation to offer a radical reading of our present creative moment, exploring artificial intelligence as both a narrative framework and compositional tool.

Created in collaboration with writer and scholar Louis Chude-Sokei as well as the AI Architecture collective Birds on Mars, the project has at its core a bespoke software capable of modelling speech, which, operated as an instrument by St. Werner and Toma, adds an extra layer of creativity (and conceptual ambiguity) to the process. The result is a work that questions the very nature of authorship and originality, while showcasing the incredible creative potential of AI tools in electronic composition.

SYNC by Albert van Abbe

Built in the Brainport Eindhoven, the first AI hardware sequencer prototype was conceived by Albert van Abbe, developed with Mathias Funk (Associate Professor at the University of Technology) and prototyping company BMD among many other partners.

A perfect pandemic project for sound artist Van Abbe, SYNC is a hyper-personal machine that generates new (MIDI) notes out of data from music he has made over the past 20 years. SYNC will trigger synthesisers & drum computers as well as (LED) light systems and even video, simultaneously and in real time.

A self-styled veteran ‘Sound Builder’, Albert van Abbe has covered just about the whole field of electronic music over the last 20 years, from minimalistic sound installations to uncompromising hardcore acid productions. A long list of collaborative works, institutional partnerships and public projects with artists of various mediums adds to an exciting list of performances both inside and outside the club.

Videographer: Mark Richter

Recorded at Grey Space (The Hague)

Presented in collaboration with TodaysArt

DanzArte - Emotional Wellbeing Technology

DanzArte teaches us to see a work of art through non-verbal communication, sound and dance gestures. It is a culture and health technological innovation project, an interactive system of artificial intelligence, technology and emotional well-being.

Small groups are invited to emulate the affective movement evoked in a painting, while an artificial intelligence system measures in real time the emotional cues of the body movement, in order to use them in a visual manipulation of the painting and to sonify the emotion contained in the movement.

The project is designed for the physical and cognitive treatment of the elderly and frail. The affective gestures evoked in the painting and the interactive sonification are stimuli for cognitive and physical reactivation.

DanzArte is a project of Casa Paganini - InfoMus. Founded in 1984, it carries out scientific and technological research, development of multimedia systems and applications.

Its research focuses on the understanding and development of computational models of non-verbal expression, crossing humanistic, scientific and artistic theories.


Musicians learning Machine Learning, a friendly introduction to AI

What is a neural network? How do you train it? What is the difference between machine learning and deep learning? What is and what isn’t artificial intelligence?

At the ‘Musicians learning machine learning. A friendly introduction to AI’ session, we will discover the basics of AI through musical examples with the participation of the scientist and developer Rebecca Fiebrink, the researcher and engineer specialising in voice Santiago Pascual, the computer scientist from the Urbino University Stefano Ferretti, and the data scientist at NTT DATA Nohemy Pereira-Veiga.

At the end of the session we won’t be able to program a robot, but AI will hold fewer mysteries for us.

With the support of the British Council Spain.

AI and future music genres

Technology has defined musical sounds, structures, genres and styles throughout the centuries, and in 2021 we are pretty much sure that AI will define the history of music for years to come, and that it will gather audiences that will identify with this new music made with AI.

Participating in the discussion are: Libby Heaney (artist, coder, quantum physicist), Nabihah Iqbal (musician, musicologist), Jan St. Werner of the band Mouse on Mars, and Marius Miron, computer scientist at the intersection of music technology and AI, transparency and fairness (Music Technology Group - UPF).

With the support of the British Council Spain.

Hackathon outcome presentations

AI and Music S+T+ARTS Festival Hackathon outcome presentations

The AI and Music S+T+ARTS Festival began on the 23rd of October with two 24h hackathons at UPC Campus Nord. NTT DATA sponsored a Live Coding Hack developed in coordination with the UPC and Sokotech, and Ableton sponsored an Educational Hack in collaboration with the UPC and Hangar.

The selected teams competed for prizes, and the winners will present their outcomes live on-stage at the AuditoriCCCB by NTT DATA on October the 28th.

Make way for the new instruments!

AI will reveal the true nature of digital instruments and will define the music of the future. Electronic instruments appeared to “imitate” acoustic instruments and they found their true personality when they stopped imitating the acoustic ones (think an electric guitar, or an organ, or a drum machine).

Let's make way for the new music instruments together with our panelists Douglas Eck, computer scientist and researcher at Google Magenta; Rob Clouth, musician, visual artist and developer, specialising in digital music tools; Agoria, dj, producer and curious mind; and Koray Tahiroglu, artist and researcher at Aalto University.

With the support of the British Council Spain.

Teaching Machines to feel like Humans

Music is mathematics and physics, but this is not the reason why we like it. We like music because it makes us feel things, so it’s crucial to explore human perception in order to train the machines and make music instruments and tools that allow us humans to play music expressively.

This is a session to discover how machines are trained to listen, see and feel like humans do.

We will be joined by the scientist and director of MIT’s Artificial Intelligence and Decision Making Faculty (AI+D), Antonio Torralba; Luc Steels, research professor at Institut de Biologia Evolutiva (UPF-CSIC) Barcelona and AI pioneer in Europe; Ioannis Patras, Professor in computer vision and human sensing at Queen Mary University of London; and Shelly Knotts, artist and improviser working with humans and computers, with a background as an academic researching the use of AI in improvisation and composition.

With the support of the British Council Spain.